But what exactly is measurement computing, and why does it matter? It’s the practice of capturing real-world data, converting it into digital form, and interpreting it with computer systems. It’s about making sense of the world around us in a language that machines understand. So buckle up and get ready to delve into measurement computing, where the physical and digital worlds intertwine.

Measurement Computing

Measurement computing acts as a fundamental part of many scientific, industrial, and technological applications. It makes use of various tools and techniques to gather, interpret, and utilise real-world data.

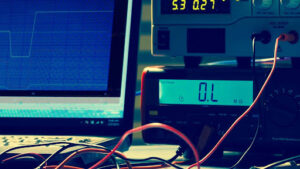

Measurement computing rests on three basic elements: sensors, analog-to-digital converters (ADCs), and computer systems. Sensors measure parameters such as temperature, pressure, or humidity and generate an analog signal. ADCs then convert this analog signal into digital form, typically a binary code. Lastly, the computer system parses this digital data and turns it into meaningful information.
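To make the conversion step concrete, here is a minimal sketch of how an idealised ADC might map a voltage to a binary code. The 5 V reference, the 10-bit width, and the function name `adc_convert` are assumptions chosen for illustration; real converters differ in range, width, and rounding behaviour.

```python
def adc_convert(voltage, v_ref=5.0, bits=10):
    """Map an analog voltage in [0, v_ref] to an integer code (idealised ADC)."""
    levels = 2 ** bits
    # Clamp to the input range the converter can represent.
    clamped = min(max(voltage, 0.0), v_ref)
    # Scale to the number of levels and truncate; the highest code is levels - 1.
    return min(int(clamped / v_ref * levels), levels - 1)


code = adc_convert(2.5)           # hypothetical sensor output of 2.5 V
print(code, format(code, "010b"))  # prints: 512 1000000000
```

The computer system only ever sees the integer code; turning it back into a temperature or pressure requires knowing the sensor's calibration.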

In terms of performance, measurement computing devices are characterised by three properties: precision, accuracy, and resolution. Precision refers to the repeatability of measurements, that is, how closely repeated readings of the same quantity agree with one another. Accuracy denotes how close a measurement is to the actual value. Resolution, on the other hand, is the smallest increment of measurement a device can discern.
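The three terms are easy to confuse, so a small numerical sketch may help. The readings and reference value below are made up, and the helper names are hypothetical; the sketch treats the mean offset from a known reference as the accuracy error, the sample standard deviation as a measure of precision, and the step size of an ideal N-bit converter as its resolution.

```python
import statistics


def accuracy_error(readings, true_value):
    """Mean offset of the readings from the known reference value."""
    return statistics.mean(readings) - true_value


def precision_spread(readings):
    """Sample standard deviation: a smaller value means more repeatable readings."""
    return statistics.stdev(readings)


def adc_resolution(v_range, bits):
    """Smallest voltage step an ideal converter of this width can distinguish."""
    return v_range / 2 ** bits


# Made-up temperature readings (degrees C) of a reference held at 25.0.
readings = [25.4, 25.5, 25.6, 25.5, 25.5]
print(accuracy_error(readings, 25.0))   # roughly 0.5: consistently too high
print(precision_spread(readings))       # roughly 0.07: tightly grouped
print(adc_resolution(10.0, 12))         # 10 V span over 4096 steps
```

In this example the instrument is precise but not accurate: the readings agree closely with each other yet sit about half a degree above the reference.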

Regarding applications, measurement computing is widely used in industry and scientific research. In weather stations, it provides precise data on meteorological parameters. In industrial production lines, it monitors and controls parameters like temperature and pressure to ensure safety and efficiency. It also plays a significant part in aerodynamics research and environmental monitoring, and even in everyday applications such as home automation systems.

The Evolution of Measurement Computing

Emerging from modest beginnings, measurement computing underwent a drastic transformation shaped by technological advancements. Early iterations featured standalone tools such as the slide rule and mechanical calculators, primarily dedicated to simple computations. These initial tools, though limited by today’s standards, marked the dawn of an era where measurements became intertwined with computation.

As the 20th century unfolded, measurement computing leaped forward with the advent of electronic computers. The room-sized ENIAC, completed in 1945, stood in stark contrast to the rudimentary devices that preceded it. It relied on vacuum tube technology and delivered significant computational power. However, its enormous size and high power consumption made it impractical for everyday use.

Another substantial breakthrough came with the arrival of microprocessors in the 1970s. This era introduced compact, efficient computational capabilities. Chips such as Intel’s 4004, released in 1971 and widely regarded as the first commercial microprocessor, revolutionised measurement computing by making complex computational tasks possible in very small devices.

The spread of the Internet in the 1990s spurred further transformation. Data acquisition systems (DAS) form a significant part of measurement computing, and the Internet provided a platform for managing such systems remotely. Consequently, DAS became far more connected and accessible.

Exploring Different Types of Measurement Computing Technologies

Measurement computing technologies have diversified considerably since the days of slide rules. Today, they encompass a broad spectrum of tools, systems, and methodologies designed to cater to different needs and applications. These technologies primarily deal with data acquisition, processing, display, and storage.

In the realm of data acquisition, an array of technologies offers precision and efficiency. Sensors, for instance, play an integral part. These devices translate physical parameters into electrical signals. Examples include thermocouples that measure temperature in industrial processes, strain gauges that track stress in civil structures, and light sensors that regulate automation systems.
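As one illustration of how an electrical signal maps back to a physical parameter, consider a strain gauge, whose resistance changes as it is stretched. The sketch below uses the standard relation strain = (ΔR / R) / gauge factor. The 120 Ω nominal resistance and gauge factor of 2.0 are typical values for metallic foil gauges but are assumptions here, as is the function name.

```python
def strain_from_resistance(r_measured, r_nominal=120.0, gauge_factor=2.0):
    """Estimate strain from a strain gauge's change in resistance.

    r_nominal and gauge_factor are assumed typical values, not taken
    from any particular datasheet.
    """
    relative_change = (r_measured - r_nominal) / r_nominal
    return relative_change / gauge_factor


# Hypothetical reading: the gauge resistance rose from 120.00 to 120.24 ohms.
strain = strain_from_resistance(120.24)
print(f"{strain * 1e6:.0f} microstrain")
```

In practice the resistance change is so small that it is usually measured with a bridge circuit rather than directly, which is where the signal conditioning stage comes in.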

Next in line, signal conditioning technologies have gained prominence. These technologies amplify, filter, or isolate signals to make them suitable for further processing. Amplifiers boost weak signals, isolators electrically separate the measurement circuit from noisy sources, and filters shape the signal for specific applications. Devices like signal conditioners and data loggers support these processes.
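Signal conditioning is normally done in analog hardware before the ADC, but the effect of amplifying and filtering can be sketched in software. The example below is only an illustration under that assumption: the sample values, the gain of 100, and the function names are made up, and a simple moving average stands in for a low-pass filter.

```python
def amplify(samples, gain):
    """Scale every sample by a fixed gain, as an amplifier stage would."""
    return [gain * s for s in samples]


def moving_average(samples, window=3):
    """Crude low-pass filter: average each sample with up to window - 1 predecessors."""
    smoothed = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        chunk = samples[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Made-up weak sensor signal in volts, with one noisy spike in the middle.
raw = [0.010, 0.012, 0.030, 0.011, 0.009]
conditioned = moving_average(amplify(raw, gain=100), window=3)
print(conditioned)
```

Amplifying first brings the signal into a range the ADC can resolve well; the filter then spreads the spike across neighbouring samples so it distorts any single reading less.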